Use One Big Server

A lot of ink is spent on the "monoliths vs. microservices" debate, but the real issue behind this debate is about whether distributed system architecture is worth the developer time and cost overheads. By thinking about the real operational considerations of our systems, we can get some insight into whether we actually need distributed systems for most things.

We have all gotten so familiar with virtualization and abstractions between our software and the servers that run it. These days, "serverless" computing is all the rage, and even "bare metal" is a class of virtual machine. However, every piece of software runs on a server. Since we now live in a world of virtualization, most of these servers are a lot bigger and a lot cheaper than we actually think.

Meet Your Server

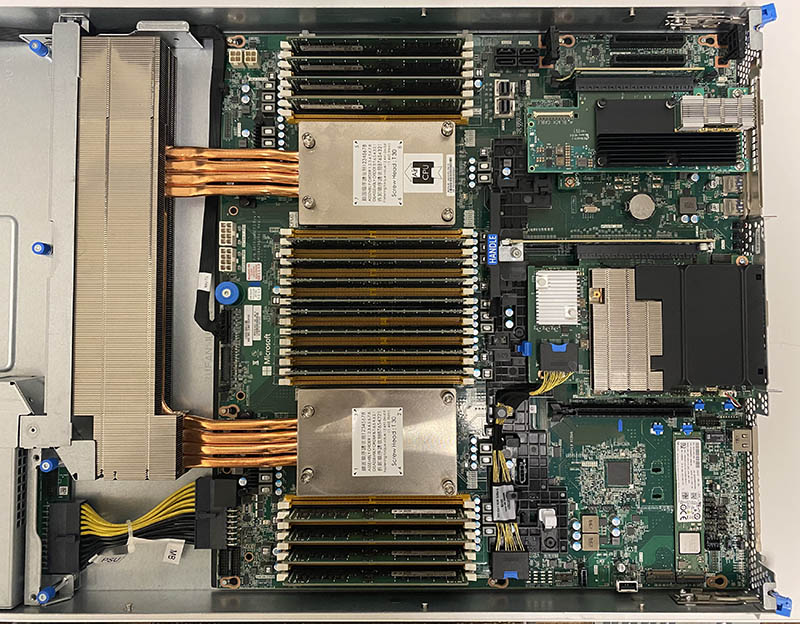

This is a picture of a server used by Microsoft Azure with AMD CPUs. Starting from the left, the big metal fixture on the left (with the copper tubes) is a heatsink, and the metal boxes that the copper tubes are attached to are heat exchangers on each CPU. The CPUs are AMD's third generation server CPU, each of which has the following specifications:

- 64 cores

- 128 threads

- ~2-2.5 GHz clock

- Cores capable of 4-6 instructions per clock cycle

- 256 MB of L3 cache

In total, this server has 128 cores with 256 simultaneous threads. With all of the cores working together, this server is capable of 4 TFLOPs of peak double precision computing performance. This server would sit at the top of the top500 supercomputer list in early 2000. It would take until 2007 for this server to leave the top500 list. Each CPU core is substantially more powerful than a single core from 10 years ago, and boasts a much wider computation pipeline.

Above and below each CPU is the memory: 16 slots of DDR4-3200 RAM per socket. The largest capacity "cost effective" DIMMs today are 64 GB. Populated cost-efficiently, this server can hold 1 TB of memory. Populated with specialized high-capacity DIMMs (which are generally slower than the smaller DIMMs), this server supports up to 8 TB of memory total. At DDR4-3200, with a total of 16 memory channels, this server will likely see ~200 Gbps of memory throughput across all of its cores.

In terms of I/O, each CPU offers 64 PCIe gen 4 lanes. With 128 PCIe lanes total, this server is capable of supporting 30 NVMe SSDs plus a network card. Typical configurations you can buy will offer slots for around 16 SSDs or disks. The final thing I wanted to point out in this picture is in the top right, the network card. This server is likely equipped with a 50-100 Gbps network connection.

The Capabilities of One Server

One server today is capable of:

- Serving video files at 400 Gbps (now 800 Gbps)

- 1 million IOPS on a NoSQL database

- 70k IOPS in PostgreSQL

- 500k requests per second to nginx

- Compiling the linux kernel in 20 seconds

- Rendering 4k video with x264 at 75 FPS

Among other things. There are a lot of public benchmarks these days, and if you know how your service behaves, you can probably find a similar benchmark.

The Cost of One Server

In a large hosting provider, OVHCloud, you can rent an HGR-HCI-6 server with similar specifications to the above, with 128 physical cores (256 threads), 512 GB of memory, and 50 Gbps of bandwidth for $1,318/month.

Moving to the popular budget option, Hetzner, you can rent a smaller server with 32 physical cores and 128 GB of RAM for about €140.00/month. This is a smaller server than the one from OVHCloud (1/4 the size), but it gives you some idea of the price spread between hosting providers.

In AWS, one of the largest servers you can rent is the m6a.metal server. It offers 50 Gbps of network bandwidth, 192 vCPUs (96 physical cores), and 768 GB of memory, and costs $8.2944/hour in the US East region. This comes out to $6,055/month. The cloud premium is real!

A similar server, with 128 physical cores and 512 GB of memory (as well as appropriate NICs, SSDs, and support contracts), can be purchased from the Dell website for about $40,000. However, if you are going to spend this much on a server, you should probably chat with a salesperson to make sure you are getting the best deal you can. You will also need to pay to host this server and connect it to a network, though.

In comparison, buying servers takes about 8 months to break even compared to using cloud servers, and 30 months to break even compared to renting. Of course, buying servers has a lot of drawbacks, and so does renting, so going forward, we will think a little bit about the "cloud premium" and whether you should be willing to pay it (spoiler alert: the answer is "yes, but not as much as the cloud companies want you to pay").

Thinking about the Cloud

The "cloud era" began in earnest around 2010. At the time, the state of the art CPU was an 8-core Intel Nehalem CPU. Hyperthreading had just begun, so that 8-core CPU offered a whopping 16 threads. Hardware acceleration was about to arrive for AES encryption, and vectors were 128 bits wide. The largest CPUs had 24 MB of cache, and your server could fit a whopping 256 GB of DDR3-1066 memory. If you wanted to store data, Seagate had just begun to offer a 3 TB hard drive. Each core offered 4 FLOPs per cycle, meaning that your 8-core server running at 2.5 GHz offered a blazing fast 80 GFLOPs.

The boom in distributed computing rode on this wave: if you wanted to do anything that involved retrieval of data, you needed a lot of disks to get the storage throughput you want. If you wanted to do large computations, you generally needed a lot of CPUs. This meant that you needed to coordinate between a lot of CPUs to get most things done.

Since that time began, the size of servers has increased a lot, and SSDs have increased available IOPS by a factor of at least 100, but the size of mainstream VMs and containers hasn't increased much, and we still use virtualized drives that perform more like hard drives than SSDs (although this gap is closing).

One Server (Plus a Backup) is Usually Plenty

If you are doing anything short of video streaming, and you have under 10k QPS, one server will generally be fine for most web services. For really simple services, one server could even make it to a million QPS or so. Very few web services get this much traffic - if you have one, you know about it. Even if you're serving video, running only one server for your control plane is very reasonable. A benchmark can help you determine where you are. Alternatively, you can use common benchmarks of similar applications, or tables of common performance numbers to estimate how big of a machine you might need.

Tall is Better than Wide

When you need a cluster of computers, if one server is not enough, using fewer larger servers will often be better than using a large fleet of small machines. There is non-zero overhead to coordinate a cluster, and that overhead is frequently O(n) on each server. To reduce this overhead, you should generally prefer to use a few large servers than to use many small servers. In the case of things like serverless computing, where you allocate tiny short-lived containers, this overhead accounts for a large fraction of the cost of use. On the other extreme end, coordinating a cluster of one computer is trivial.

Big Servers and Availability

The big drawback of using a single big server is availability. Your server is going to need downtime, and it is going to break. Running a primary and a backup server is usually enough, keeping them in different datacenters. A 2x2 configuration should appease the truly paranoid: two servers in a primary datacenter (or cloud provider) and two servers in a backup datacenter will give you a lot of redundancy. If you want a third backup deployment, you can often make that smaller than your primary and secondary.

However, you may still have to be concerned about correlated hardware failures. Hard drives (and now SSDs) have been known to occasionally have correlated failures: if you see one disk fail, you are a lot more likely to see a second failure before getting back up if your disks are from the same manufacturing batch. Services like Backblaze overcome this by using many different models of disks from multiple manufacturers. Hacker news learned this the hard way recently when the primary and backup server went down at the same time.

If you are using a hosting provider which rents pre-built servers, it is prudent to rent two different types of servers in each of your primary and backup datacenters. This should avoid almost every failure mode present in modern systems.

Use the Cloud, but don't be too Cloudy

A combination of availability and ease of use is one of the big reasons why I (and most other engineers) like cloud computers. Yes, you pay a significant premium to rent the machines, but your cloud provider has so much experience building servers that you don't even see most failures, and for the other failures, you can get back up and running really quickly by renting a new machine in their nearly-limitless pool of compute. It is their job to make sure that you don't experience downtime, and while they don't always do it perfectly, they are pretty good at it.

Hosting providers who are willing to rent you a server are a cheaper alternative to cloud providers, but these providers can sometimes have poor quality and some of them don't understand things like network provisioning and correlated hardware failures. Also, moving from one rented server to a larger one is a lot more annoying than resizing a cloud VM. Cloud servers have a price premium for a good reason.

However, when you deal with clouds, your salespeople will generally push you towards "cloud-native" architecture. These are things like microservices in auto-scaling VM groups with legions of load balancers between them, and vendor-lock-in-enhancing products like serverless computing and managed high-availability databases. There is a good reason that cloud salespeople are the ones pushing "cloud architecture" - it's better for them!

The conventional wisdom is that using cloud architecture is good because it lets you scale up effortlessly. There are good reasons to use cloud-native architecture, but serving lots of people is not one of them: most services can serve millions of people at a time with one server, and will never give you a surprise five-figure bill.

Why Should I Pay for Peak Load?

One common criticism of the "one big server" approach is that you now have to pay for your peak usage instead of paying as you go for what you use. Thus, serverless computing or fleets of microservice VMs more closely align your costs with your profit.

Unfortunately, since all of your services run on servers (whether you like it or not), someone in that supply chain is charging you based on their peak load. Part of the "cloud premium" for load balancers, serverless computing, and small VMs is based on how much extra capacity your cloud provider needs to build in order to handle their peak load. You're paying for someone's peak load anyway!

This means that if your workload is exceptionally bursty - like a simulation that needs to run once and then turn off forever - you should prefer to reach for "cloudy" solutions, but if your workload is not so bursty, you will often have a cheaper system (and an easier time building it) if you go for few large servers. If your cloud provider's usage is more bursty than yours, you are going to pay that premium for no benefit.

This premium applies to VMs, too, not just cloud services. However, if you are running a cloud VM 24/7, you can avoid paying the "peak load premium" by using 1-year contracts or negotiating with a salesperson if you are big enough.

Generally, the burstier your workload is, the more cloudy your architecture should be.

How Much Does it Cost to be Cloudy?

Being cloudy is expensive. Generally, I would anticipate a 5-30x price premium depending on what you buy from a cloud company, and depending on the baseline. Not 5-30%, a factor of between 5 and 30.

Here is the pricing of AWS lambda: $0.20 per 1M requests + $0.0000166667 per GB-second of RAM. I am using pricing for an x86 CPU here to keep parity with the m6a.metal instance we saw above. Large ARM servers and serverless ARM compute are both cheaper.

Assuming your server costs $8.2944/hour, and is capable of 1k QPS with 768 GB of RAM:

-

1k QPS is 60k queries per minute, or 3.6M queries per hour

-

Each query here gets 0.768 GB-seconds of RAM (amortized)

-

Replacing this server would cost about $46/hour using serverless computing

The price premium for serverless computing over the instance is a factor of 5.5. If you can keep that server over 20% utilization, using the server will be cheaper than using serverless computing. This is before any form of savings plan you can apply to that server - if you can rent those big servers from the spot market or if you compare to the price you can get with a 1-year contract, the price premium is even higher.

If you compare to the OVHCloud rental price for the same server, the price premium of buying your compute through AWS lambda is a factor of 25

If you are considering renting a server from a low-cost hosting provider or using AWS lambda, you should prefer the hosting provider if you can keep the server operating at 5% capacity!

Also, note that the actual QPS number doesn't matter: if the $8.2944/hour server is capable of 100k QPS, the query would use 100x less memory-time, meaning that you would arrive at the same 5.5x (or 25x) premium. Of course, you should scale the size of the server to fit your application.

Common Objections to One Big Server

If you propose using the one big server approach, you will often get pushback from people who are more comfortable with the cloud, prefer to be fashionable, or have legitimate concerns. Use your judgment when you think about it, but most people vastly underestimate how much "cloud architecture" actually costs compared to the underlying compute. Here are some common objections.

But if I use Cloud Architecture, I Don't Have to Hire Sysadmins

Yes you do. They are just now called "Cloud Ops" and are under a different manager. Also, their ability to read the arcane documentation that comes from cloud companies and keep up with the corresponding torrents of updates and deprecations makes them 5x more expensive than system administrators.

But if I use Cloud Architecture, I Don't Have to Do Security Updates

Yes you do. You may have to do fewer of them, but the ones you don't have to do are the easy ones to automate. You are still going to share in the pain of auditing libraries you use, and making sure that all of your configurations are secure.

But if I use Cloud Architecture, I Don't Have to Worry About it Going Down

The "high availability" architectures you get from using cloudy constructs and microservices just about make up for the fragility they add due to complexity. At this point, if you use two different cloud regions or two cloud providers, you can generally assume that is good enough to avoid your service going down. However, cloud providers have often had global outages in the past, and there is no reason to assume that cloud datacenters will be down any less often than your individual servers.

Remember that we are trying to prevent correlated failures. Cloud datacenters have a lot of parts that can fail in correlated ways. Hosting providers have many fewer of these parts. Similarly, complex cloud services, like managed databases, have more failure modes than simple ones (VMs).

But I can Develop More Quickly if I use Cloud Architecture

Then do it, and just keep an eye on the bill and think about when it's worth it to switch. This is probably the strongest argument in favor of using cloudy constructs. However, if you don't think about it as you grow, you will likely end up burning a lot of money on your cloudy architecture long past the time to switch to something more boring.

My Workload is Really Bursty

Cloud away. That is a great reason to use things like serverless computing. One of the big benefits of cloud architecture constructs is that the scale down really well. If your workload goes through long periods of idleness punctuated with large unpredictable bursts of activity, cloud architecture probably works really well for you.

What about CDNs?

It's impossible to get the benefits of a CDN, both in latency improvements and bandwidth savings, with one big server. This is also true of other systems that need to be distributed, like backups. Thankfully CDNs and backups are competitive markets, and relatively cheap. These are the kind of thing to buy rather than build.

A Note On Microservices and Monoliths

Thinking about "one big server" naturally lines up with thinking about monolithic architectures. However, you don't need to use a monolith to use one server. You can run many containers on one big server, with one microservice per container. However, microservice architectures in general add a lot of overhead to a system for dubious gain when you are running on one big server.

Conclusions

When you experience growing pains, and get close to the limits of your current servers, today's conventional wisdom is to go for sharding and horizontal scaling, or to use a cloud architecture that gives you horizontal scaling "for free." It is often easier and more efficient to scale vertically instead. Using one big server is comparatively cheap, keeps your overheads at a minimum, and actually has a pretty good availability story if you are careful to prevent correlated hardware failures. It's not glamorous and it won't help your resume, but one big server will serve you well.